So far, we saw how a Simple Reflex Agent works — quick, but a bit forgetful! 😅

It reacts instantly but has no memory and no sense of what happened before.

Imagine if your vacuum robot cleans the same spot again and again because it can’t remember where it’s been — frustrating, right?

💭 Now imagine if we give it a tiny bit of memory — so it can remember what it saw earlier and understand what the world looks like now, even when it can’t see everything at once.

That’s where our next hero enters — the Model-Based Reflex Agent! 🚀

This agent is just like the previous one but smarter.

It still reacts based on rules, but now it also keeps track of what happened before using an internal model of the world.

Let’s see how this slightly brainier agent works 👇

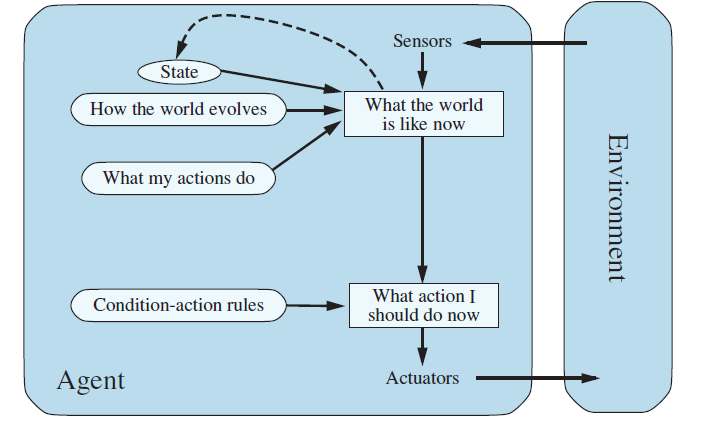

The diagram shows how a Model-Based Reflex Agent works. Let’s break it down clearly.

How It Works (Step-by-Step Explanation)

It’s an improved version of a Simple Reflex Agent.

- Environment → Sensors

The environment (the real world) provides data to the agent, such as the road, other cars, traffic signals, and the weather.

The sensors (camera, radar, GPS) collect current information — for example:

“A red light is visible ahead,” or “There’s a car in front.”

- Sensors → “What the world is like now” (Current Percept)

The sensor input tells the agent what it currently sees.

But since the car cannot see everything (for example, whatever is hidden behind a truck), its knowledge of the world is incomplete.

- Internal State + Current Information → Updated View

Now, the agent updates its internal state (memory) by combining:

- What it senses now

- What it already knew before (previous internal state)

✅ Example:

Last second, the car detected another vehicle on the left.

Now it can’t see it (maybe blocked by a truck).

The agent remembers that car and assumes it is still there.
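The memory update in this example can be sketched in a few lines of Python. This is a minimal illustration, not a real driving stack: it assumes a hypothetical percept format (a dict mapping each visible vehicle’s ID to its lane), and `update_state`, `car_7`, and `truck_2` are made-up names.

```python
def update_state(state, percept, time):
    """Merge the current percept into the agent's internal state (hypothetical format).

    state:   dict of vehicle_id -> {"lane": str, "last_seen": int}
    percept: dict of vehicle_id -> lane, for vehicles visible right now
    time:    current time step
    """
    # Copy the old memory so the previous state is not modified in place.
    new_state = {vid: dict(info) for vid, info in state.items()}
    for vid, lane in percept.items():
        # Visible vehicles: overwrite memory with fresh sensor data.
        new_state[vid] = {"lane": lane, "last_seen": time}
    # Vehicles missing from the percept are kept, so the agent still "knows" about them.
    return new_state


# t=0: a car is visible on the left.
state = update_state({}, {"car_7": "left"}, time=0)
# t=1: a truck now blocks the view, so car_7 is not in the percept.
state = update_state(state, {"truck_2": "left"}, time=1)
print(state["car_7"])  # {'lane': 'left', 'last_seen': 0}
```

Visible vehicles overwrite the old memory; hidden ones are simply kept. A real system would also drop entries that have gone unseen for too long, which this sketch does not do.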

- “How the world evolves”

This means:

How things in the environment change on their own, even when the agent does nothing.

It’s about natural changes in the world that are not caused by the agent’s actions.

✅ Examples:

The traffic light changes from green → yellow → red by itself.

It starts raining, so the road becomes slippery.

A pedestrian crosses the road — the agent didn’t cause it.

So this block helps the agent predict how the world might change over time automatically.
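A “how the world evolves” model for the traffic-light example might look like the sketch below. It assumes a fixed green → yellow → red cycle with made-up durations (30 s, 4 s, 26 s); real signal timings vary. Given the current color and how long it has been showing, the agent predicts the color a few seconds ahead without taking any action itself.

```python
# Hypothetical fixed signal timings in seconds; real intersections differ.
CYCLE = [("green", 30), ("yellow", 4), ("red", 26)]


def predict_light(color, seconds_in_state, dt):
    """Predict the light's color `dt` seconds from now.

    color:            currently observed color
    seconds_in_state: how long the light has already shown that color
    dt:               how far ahead to predict, in seconds
    """
    idx = [c for c, _ in CYCLE].index(color)
    elapsed = seconds_in_state + dt  # time since the current phase started
    while elapsed >= CYCLE[idx][1]:
        elapsed -= CYCLE[idx][1]      # this phase has ended...
        idx = (idx + 1) % len(CYCLE)  # ...so move on to the next one
    return CYCLE[idx][0]


# Green for 28 s already; what will it be 3 s from now?
print(predict_light("green", 28, 3))  # yellow
```

The world changes here purely with time; nothing the agent does affects the light.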

- What my actions do

This means:

How the agent’s own actions change the environment.

✅ Examples:

If the car turns the steering wheel to the right, the car moves right.

If it presses the brakes, the car slows down.

This block helps the agent understand the effects of its own actions — so it can predict what will happen if it acts a certain way.
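Similarly, “what my actions do” can be sketched as a function that predicts the car’s next speed and lane after an action. The effect sizes (+2 m/s² for accelerating, −4 m/s² for braking) and the three-lane road are hypothetical numbers chosen for illustration.

```python
# Hypothetical effect sizes; a real vehicle model would be far more detailed.
ACCEL = {"accelerate": 2.0, "brake": -4.0, "keep": 0.0}  # m/s^2
LANES = ["left", "middle", "right"]


def predict_result(speed, lane, action, dt=1.0):
    """Return the (speed, lane) the agent expects after doing `action` for dt seconds."""
    if action in ACCEL:
        # Braking can slow the car down but never make its speed negative.
        speed = max(0.0, speed + ACCEL[action] * dt)
    elif action == "steer_right":
        lane = LANES[min(LANES.index(lane) + 1, len(LANES) - 1)]
    elif action == "steer_left":
        lane = LANES[max(LANES.index(lane) - 1, 0)]
    else:
        raise ValueError(f"unknown action: {action}")
    return speed, lane


print(predict_result(10.0, "middle", "brake"))        # (6.0, 'middle')
print(predict_result(10.0, "middle", "steer_right"))  # (10.0, 'right')
```

Because the agent can run this prediction before acting, it can ask “what happens if I brake?” instead of only reacting afterwards.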

A Model-Based Agent is like a human with memory: it doesn’t just act, it remembers where it’s been!

- Condition–Action Rules → Decision

Now, using the updated internal state, the agent applies its rules:

- If obstacle ahead → slow down

- If road clear → accelerate
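The two rules above can be written as an ordered list of (condition, action) pairs checked against the internal state rather than the raw percept. The state keys `obstacle_ahead` and `road_clear` and the fallback action `keep_speed` are assumptions for this sketch.

```python
# Ordered condition-action rules: the first matching condition wins.
# The state keys are hypothetical names for facts kept in the internal state.
RULES = [
    (lambda s: s.get("obstacle_ahead", False), "slow_down"),
    (lambda s: s.get("road_clear", False), "accelerate"),
]


def rule_match(state, rules=RULES, default="keep_speed"):
    """Return the action of the first rule whose condition holds in `state`."""
    for condition, action in rules:
        if condition(state):
            return action
    return default  # hypothetical fallback when no rule fires


print(rule_match({"obstacle_ahead": True}))  # slow_down
print(rule_match({"road_clear": True}))      # accelerate
print(rule_match({}))                        # keep_speed
```

Because the rules are tried in order, putting the safety rule first means the agent slows down if both conditions happen to be true.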

- “What action I should do now” → Actuators → Environment

The agent decides the best action and sends it to its actuators (wheels, brakes, steering).

The environment changes as a result (the car slows down or turns).

- Continuous Feedback Loop

The process repeats continuously — the agent keeps sensing, updating, deciding, and acting.

That’s how it “reacts intelligently” even when it can’t see the full picture.
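The whole loop can be sketched as a small Python class. Its overall shape (keep a state, update it from the last action and the new percept, match a rule, remember the action) follows the model-based reflex agent pseudocode in Russell and Norvig’s Artificial Intelligence: A Modern Approach, but the toy world is an assumption: the only thing tracked is the distance to a stationary obstacle, percepts are that distance in meters (or `None` when the view is blocked), and every number is made up.

```python
class ModelBasedReflexAgent:
    """Sketch of a model-based reflex agent approaching a stationary obstacle.

    Hypothetical setup: each percept is the distance (m) to the obstacle ahead,
    or None when the sensor's view is blocked.
    """

    def __init__(self, speed=10.0):
        # Internal state: the agent's best guess about the world.
        self.state = {"distance": None, "speed": speed}
        self.last_action = None

    def predict(self, state, action):
        """Transition model ("what my actions do") for one 1-second step.

        The obstacle is assumed not to move, so nothing evolves on its own here.
        """
        s = dict(state)
        if action == "brake":
            s["speed"] = max(0.0, s["speed"] - 4.0)
        elif action == "accelerate":
            s["speed"] += 2.0
        if s["distance"] is not None:
            # Driving forward closes the gap to the obstacle.
            s["distance"] = max(0.0, s["distance"] - s["speed"])
        return s

    def update_with_percept(self, state, percept):
        """Trivial sensor model: a reading, when present, is the true distance."""
        s = dict(state)
        if percept is not None:
            s["distance"] = percept
        return s

    def rule_match(self, state):
        """Condition-action rules applied to the internal state."""
        d = state["distance"]
        if d is not None and d < 30.0:
            return "brake"       # obstacle ahead -> slow down
        if d is None or d > 60.0:
            return "accelerate"  # road clear -> accelerate
        return "keep"

    def __call__(self, percept):
        # Sense -> update internal state -> decide -> act (returned to the actuators).
        predicted = self.predict(self.state, self.last_action)
        self.state = self.update_with_percept(predicted, percept)
        self.last_action = self.rule_match(self.state)
        return self.last_action


agent = ModelBasedReflexAgent(speed=10.0)
# 45 m to the obstacle, then the view is blocked for two steps.
print([agent(p) for p in [45.0, None, None]])  # ['keep', 'keep', 'brake']
```

On the third step the sensor sees nothing, yet the agent brakes: its model predicts the gap has shrunk from 45 m to about 25 m. A simple reflex agent given the same `None` percept would have nothing to react to.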

Example: Self-Driving Car

Let’s connect it:

- Sensors: Cameras, radar, GPS

- Internal State: Knows previous car positions, speed, traffic light status

- Transition Model: “If I accelerate, I move forward; if I turn right, I change lanes.”

- Sensor Model: “If I see the red brake lights of the car ahead, that car is braking.”

- Action: Slow down, stop, or steer based on updated state.
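The sensor-model idea can be illustrated as a function that turns raw detections into facts for the internal state. The percept keys (`lead_car_visible`, `lead_car_tail_lights`, `traffic_light`) are hypothetical; a real perception system produces much richer and noisier output.

```python
def interpret_percept(percept):
    """Hypothetical sensor model: map raw detections to facts about the world.

    percept: dict from an assumed camera pipeline, e.g.
             {"lead_car_visible": True, "lead_car_tail_lights": "red"}
    """
    facts = {}
    if percept.get("lead_car_visible", False):
        # Red (lit) brake lights on the car ahead are read as "that car is braking".
        facts["lead_car_braking"] = percept.get("lead_car_tail_lights") == "red"
    if "traffic_light" in percept:
        facts["light_color"] = percept["traffic_light"]
    return facts


print(interpret_percept({"lead_car_visible": True, "lead_car_tail_lights": "red"}))
# {'lead_car_braking': True}
```

When the car ahead is not visible, no `lead_car_braking` fact is produced, so the agent keeps whatever it previously believed about that car.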

🚗 Complete Example — Self-Driving Car Flow Summary:

| Step | Diagram Block | What Happens |
|---|---|---|
| 1 | Sensors → “What the world is like now” | Car camera sees “Green Light” |
| 2 | “How the world evolves” | The car knows lights change every few seconds → predicts “It will turn Yellow soon” |
| 3 | “What my actions do” | If I speed up → I might cross before red, but risk an accident |
| 4 | Internal State Updated | Agent stores: “Light might change soon; keep moderate speed.” |
| 5 | Condition–Action Rule | If light turns yellow → slow down. |
| 6 | Actuators | The car starts braking. |

In Short:

| Component | Purpose |
|---|---|
| Sensors | Sense the environment |
| Internal State | Remember what’s not currently visible |
| Transition Model | Know how the world changes |
| Sensor Model | Know how sensor readings relate to the actual state of the world |
| Condition–Action Rules | Decide what to do |
| Actuators | Execute the action |

How Is It Different from a Simple Reflex Agent?

It adds 3 new boxes/connections on top of the simple reflex agent’s diagram:

| 🔲 New Box | 🧠 Meaning / Function | 💡 Example |
|---|---|---|
| State (Internal State) | Stores what the agent remembers about the world; helps it keep track of things it can’t currently see. | Car remembers there was a vehicle behind the truck even if it’s hidden now. |
| How the world evolves | Part of the agent’s Transition Model: knowledge of how the world changes over time on its own, without the agent’s actions. | “If it rains → roads get slippery.” “The traffic light changes green → yellow → red by itself.” |
| What my actions do | The other part of the Transition Model: knowledge of the effect of its own actions on the world. | “If I turn the steering wheel right → car turns right.” “If I press the brake → car slows down.” “If I move forward → distance to obstacle decreases.” |

➡️ These 3 new boxes connect back to the “What the world is like now” block, updating it based on memory + new sensor input.
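A side-by-side sketch makes the difference concrete. Both agents below answer “Is it safe to move into the left lane?” from the same percepts: a hypothetical boolean saying whether a car is currently visible on the left. The three-step memory window is an assumed “how the world evolves” rule, not something the diagram specifies.

```python
def simple_reflex_can_change_left(car_visible_left):
    """Simple reflex: decides from the current percept only."""
    return not car_visible_left


class ModelBasedLaneChecker:
    """Model-based: remembers a car seen on the left even after it is hidden."""

    def __init__(self, memory_steps=3):
        # Hypothetical "how the world evolves" assumption: after this many
        # unseen steps, the hidden car is assumed to have moved away.
        self.memory_steps = memory_steps
        self.steps_unseen = None  # None = no car remembered on the left

    def can_change_left(self, car_visible_left):
        if car_visible_left:
            self.steps_unseen = 0          # fresh sighting
        elif self.steps_unseen is not None:
            self.steps_unseen += 1         # still remembered, just not visible
            if self.steps_unseen > self.memory_steps:
                self.steps_unseen = None   # forget it
        return self.steps_unseen is None


# Step 0: car visible on the left; steps 1-2: it is hidden behind a truck.
percepts = [True, False, False]
checker = ModelBasedLaneChecker()
print([simple_reflex_can_change_left(p) for p in percepts])  # [False, True, True]
print([checker.can_change_left(p) for p in percepts])        # [False, False, False]
```

The simple reflex agent would change lanes as soon as the car disappears behind the truck; the model-based agent waits until its memory of that car expires.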

Advantages of Model-Based Reflex Agent

| No. | Advantage | Explanation / Example |
|---|---|---|
| 1️⃣ | Handles partial observability | Works even when it can’t see the whole environment by remembering past information. |
| 2️⃣ | Maintains internal memory (state) | Stores past percepts to make smarter and more informed decisions. |
| 3️⃣ | Understands how actions affect the world | Uses a transition model to predict results of its actions (e.g., turning the wheel → car turns right). |
| 4️⃣ | Makes better decisions than simple reflex agents | Combines current perception with past knowledge instead of reacting blindly. |
| 5️⃣ | Performs well in dynamic, real-world environments | Suitable for changing conditions like driving or cleaning where the environment isn’t fixed. |

Limitations of Model-Based Reflex Agent

No sense of direction or purpose

- The model-based agent knows what is happening, but doesn’t know what it ultimately wants.

Example: A self-driving car can follow traffic rules and avoid collisions, but it doesn’t know where to go (its destination).

➡️ We need goals to give it direction.

Cannot compare or choose between alternatives

When multiple actions seem okay, it has no way to decide which one leads to a better outcome.

Example: At a junction, it can go left, right, or straight, but without a goal, it doesn’t know which direction is correct.