Introduction

Have you ever wondered —

“If a machine can calculate numbers, can it also think like a brain?”

That single question gave birth to one of the greatest scientific revolutions of our time: Artificial Intelligence (AI). AI didn’t start with robots or computers that talk.

It started with two curious minds in the 1940s who wanted to understand how the human brain thinks — and whether a machine could do the same.

1943 — McCulloch & Pitts: The First Neuron Model

In 1943, Warren McCulloch, a neuroscientist, and Walter Pitts, a mathematician, did something extraordinary.

They looked at the human brain and said:

“Let’s turn neurons into math!”

They built the first mathematical model of a neuron, showing that a brain cell could be represented as a simple switch — ON or OFF, just like the circuits in a computer.

Using logic gates (AND, OR, NOT), they demonstrated how combinations of these artificial neurons could make decisions — forming the basic idea of a neural network.
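To make the ON/OFF idea concrete, here is a minimal Python sketch of a threshold neuron in the spirit of McCulloch and Pitts. The function names, weights, and thresholds are choices made for this illustration, not taken from the 1943 paper, and the negative weight in `NOT` stands in for what they described as an inhibitory input.

```python
def mp_neuron(inputs, weights, threshold):
    """Fire (1) if the weighted sum of the inputs reaches the threshold, else stay OFF (0)."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

def AND(a, b):
    # Both inputs must be ON to reach a threshold of 2.
    return mp_neuron([a, b], weights=[1, 1], threshold=2)

def OR(a, b):
    # A single ON input is enough to reach a threshold of 1.
    return mp_neuron([a, b], weights=[1, 1], threshold=1)

def NOT(a):
    # An ON input pushes the sum below the threshold of 0.
    return mp_neuron([a], weights=[-1], threshold=0)

print(AND(1, 1), OR(0, 1), NOT(1))  # 1 1 0
```

Chaining these small units together (for example, feeding the output of `OR` into `NOT`) is the "combination of artificial neurons" idea in its simplest form.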

Why This Was Important

Before this, calculating machines were seen as tools for arithmetic and nothing more.

After McCulloch and Pitts, scientists realized that computers could also simulate thinking — by connecting artificial neurons like the brain connects biological ones.

In short, they:

- Modeled brain cells using logic (ON/OFF system).

- Created the first neuron model — the base of all future AI systems.

- Showed that logical reasoning can be represented mathematically.

Impact:

This became the first mathematical foundation for thinking machines, and it laid the groundwork for everything AI does today — from recognizing your face to predicting your next movie on Netflix!

1949 — Donald Hebb: “Neurons that fire together, wire together”

After McCulloch and Pitts showed that neurons could be modeled with math, another scientist took the idea one step further — he wanted to explain how the brain learns.

Meet Donald Hebb, a Canadian psychologist.

In 1949, he proposed a simple but powerful rule about learning in the brain.

He said:

“When two neurons activate together often, their connection becomes stronger.”

Understanding Hebb’s Idea

Think of your brain as a giant network of tiny lightbulbs (neurons).

Whenever you practice something — like riding a bicycle or typing on a keyboard — certain “lightbulbs” in your brain turn on together again and again.

Over time, the connections between those bulbs get stronger — meaning you can now perform that task automatically without much thought.

That’s what Hebb meant when he said:

“Neurons that fire together, wire together.”

It’s like saying — the more often two friends talk, the stronger their friendship becomes.

In the same way, the more two neurons “talk,” the stronger their connection grows.

Why This Was Important

Hebb’s idea was revolutionary because it explained how learning happens — not through memorization, but through strengthening connections between active neurons.

It became the foundation for how machines learn patterns too.

In AI terms:

- When two artificial neurons “fire together” (both are active during learning), the connection (weight) between them is increased.

- This is how a neural network gradually learns from examples: by reinforcing the useful paths.
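The rule can be written in one line of code. The sketch below is a simplified illustration: the function name `hebbian_update` and the learning rate of 0.1 are assumptions made for this example, and real networks use more elaborate update rules.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Strengthen the connection only when both neurons are active together."""
    return weight + learning_rate * pre * post

weight = 0.0
for _ in range(10):  # the two neurons fire together ten times
    weight = hebbian_update(weight, pre=1, post=1)

print(round(weight, 2))  # 1.0
```

If only one of the two neurons is active (`pre=1, post=0`), the product is zero and the weight stays where it was.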

Example: Learning to Ride a Bicycle

At first, you wobble, lose balance, and fall — but your brain is learning.

Each time you try, your balance neurons and movement neurons fire together.

Soon, those connections become strong enough that you can ride smoothly — without even thinking about it.

That’s Hebbian learning, and the same basic idea of strengthening useful connections still underlies AI training today!

Impact

Hebb’s theory became the foundation of learning for both biological brains and artificial networks.

His work directly inspired Hebbian learning — an early concept that later evolved into the weight adjustment rules used in modern neural networks.

In short:

McCulloch and Pitts showed how a brain could think.

Hebb showed how a brain could learn.

1950 — Alan Turing: “Can Machines Think?”

After scientists discovered how neurons think (McCulloch & Pitts) and how the brain learns (Hebb), a brilliant mathematician named Alan Turing asked a new and bold question:

“If the human brain can think, can a machine think too?”

This question completely changed the direction of science — and gave birth to the dream of Artificial Intelligence.

Who Was Alan Turing?

Alan Turing was a British mathematician and computer scientist.

During World War II, he helped break the German Enigma code, work that is widely credited with shortening the war and saving many lives.

But even after the war, Turing wasn’t satisfied just solving problems; he wanted to understand the nature of intelligence itself.

What He Did

In 1950, Turing wrote a famous paper titled “Computing Machinery and Intelligence.”

In it, he posed a question that few people had taken seriously before:

“Can machines think?”

He didn’t just stop there — he proposed a way to test whether a machine is truly intelligent.

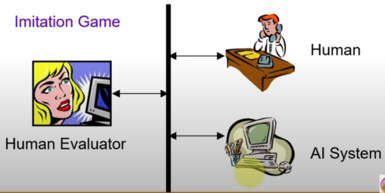

The Turing Test

Turing said:

If a human exchanges typed messages with a hidden partner,

and cannot tell whether the responses come from a human or a machine —

then the machine is intelligent.

This idea became known as the Turing Test — the first real test for Artificial Intelligence.

When you chat with an AI like ChatGPT, and it replies so naturally that you forget it’s not a real person —

that’s basically Turing’s Test in action!

Turing imagined this more than 70 years ago, long before modern computers existed.

Why It Was Important

Turing gave AI its philosophical foundation — the goal of making machines that can think, reason, and communicate like humans.

He turned a dream into a scientific question — one that scientists could now explore and measure.

1956 — The Dartmouth Conference: The Birth of AI

After Alan Turing asked, “Can machines think?”, a group of brilliant scientists decided to find out.

They wanted to turn this question into a real research field — and that’s how Artificial Intelligence was born.

Who Were They?

The organizers and key participants included:

- John McCarthy — Mathematician who later became known as the “Father of Artificial Intelligence.”

- Marvin Minsky — Cognitive scientist who built early intelligent machines.

- Claude Shannon — The father of Information Theory.

- Herbert Simon & Allen Newell — Researchers who created some of the first AI programs.

What They Did

In 1956, McCarthy, Minsky, Shannon, and Nathaniel Rochester organized a summer workshop at Dartmouth College, USA.

This meeting was called the Dartmouth Conference, and it was the first official gathering of scientists who wanted to make machines “think.”

John McCarthy proposed the name “Artificial Intelligence” — and it stuck!

That’s why we say:

“Dartmouth, 1956 — the place where AI got its name and identity.”

Why It Happened

These scientists believed that:

“Every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

In simple terms —

they thought if we can describe how humans think, we can teach computers to think the same way.

Example

Think of the Dartmouth Conference as the “birth certificate” of Artificial Intelligence.

Just like a baby gets a name and identity when born —

AI got its name (“Artificial Intelligence”) and started its life as a new field of science here.

Key Takeaway

- The Dartmouth Conference (1956) marks the official birth of Artificial Intelligence.

- John McCarthy is called the Father of AI for naming the field and guiding its vision.

- This event turned the idea of AI into a real scientific mission.

1956–1969: The Early Enthusiasm — “AI Learns to Walk”

After the Dartmouth Conference, scientists were filled with excitement.

They had named Artificial Intelligence — now they wanted to make it work!

This period is known as the Early Years of AI, when the first programs that could think, reason, and learn were created.

Logic Theorist (1956)

Who: Allen Newell and Herbert A. Simon

What they did: They created a computer program called Logic Theorist, which could solve mathematical logic problems.

It proved theorems from a famous book called Principia Mathematica — something only trained mathematicians could do before.

Why It Mattered

This was the first AI program that could reason logically, step by step —

just like a human solving a math problem.

It showed that computers could use logic to make decisions, not just follow instructions blindly.

Impact

- Logic Theorist is often considered the first successful AI program.

- It proved that reasoning could be done by a machine.

- It inspired later programs in problem-solving and decision-making.

Arthur Samuel’s Checkers Program (1959)

Who: Arthur Samuel (IBM Scientist)

What he did: He created a computer program that played checkers (draughts) — and it got better the more it played!

Instead of being told every move, the program learned from experience — a concept we now call Machine Learning.

How It Worked

Every time the computer played a game:

- It remembered which moves led to a win or loss.

- It adjusted its future strategy based on those results.

So, the more it played, the smarter it became — just like humans improve with practice!
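The feedback loop above can be sketched in a few lines of Python. This is only a toy illustration of "remember the result, adjust the strategy": Samuel’s actual program combined game-tree search with a learned scoring function, and the move names, the `move_value` table, and the step size here are all hypothetical.

```python
from collections import defaultdict

# Hypothetical table: one learned score per move name, starting at 0.
move_value = defaultdict(float)

def learn_from_game(moves_played, won, step=0.1):
    """Nudge the score of every move played toward +1 after a win, -1 after a loss."""
    outcome = 1.0 if won else -1.0
    for move in moves_played:
        move_value[move] += step * (outcome - move_value[move])

def choose(candidates):
    """Pick the candidate move with the highest learned score."""
    return max(candidates, key=lambda move: move_value[move])

learn_from_game(["advance_center", "guard_back_row"], won=True)
learn_from_game(["expose_king"], won=False)
print(choose(["expose_king", "advance_center"]))  # advance_center
```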

Impact

- This was one of the first self-learning programs.

- It introduced the idea that machines can learn from data and feedback instead of fixed instructions.

- It laid the foundation for modern machine learning and even today’s AI systems like ChatGPT or self-driving cars.

1958 — John McCarthy and LISP: The Language of AI

After AI began to “walk” with early programs like Logic Theorist and Checkers, it needed something important —

a language to think and communicate in.

That’s when John McCarthy, often called the Father of Artificial Intelligence, created LISP, one of the first programming languages designed for AI.

What Is LISP?

- LISP stands for “List Processing.”

- It allowed computers to work not only with numbers, but also with symbols, logic, and reasoning, the things human thinking is based on.

- Before LISP, most programming languages focused on mathematical calculations.

- But AI needed to deal with words like if, then, human, machine, etc. — and LISP made that possible.

Example

Imagine trying to teach a robot about emotions.

You can’t just use numbers — you need words like “happy,” “sad,” or “angry.”

LISP made this kind of symbolic reasoning possible —

it was like giving AI a new language to think in!

Even today, LISP is still used in AI research, especially in areas involving reasoning and symbolic logic.

Impact

- It became the main AI language for decades.

- Most early AI systems and research tools were built using LISP.

- It influenced later languages used for AI, such as Prolog and Python (used in modern AI).

The First AI Winter — “AI Fails Its First Exam” (1970s)

After the early excitement of the 1950s and 60s — when AI could play games, prove math theorems, and even “think” using LISP —

scientists started to dream big.

They believed machines would soon understand language, see like humans, and reason like experts.

But then… reality hit hard.

What Went Wrong?

AI was brilliant in small, controlled situations — like solving logical puzzles —

but it completely failed when faced with the real world.

Let’s see why 👇

- Computers were too slow and expensive. The hardware of the 1970s couldn’t handle complex AI algorithms or large data.

- AI programs were too limited. They could play checkers or prove theorems, but couldn’t understand normal human language or recognize images.

- Funding dried up. Governments and research labs lost patience because AI wasn’t delivering on its promises. So, money and support for AI research stopped.

The Famous Example: The Perceptron Problem

Two famous scientists — Marvin Minsky and Seymour Papert —

studied an early neural network model called the Perceptron.

They showed that a single-layer perceptron couldn’t solve some surprisingly simple problems,

like XOR: deciding whether exactly one of two inputs is ON.

That discovery stalled neural network research for more than a decade.

Everyone thought — “AI can’t really learn complex patterns.”
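The best-known example of this limit is XOR (output 1 when exactly one of two inputs is ON). The Python sketch below is a minimal illustration, not Minsky and Papert’s own analysis: it trains a single-layer perceptron with the classic error-correction rule, and the function names and the 25 training passes are choices made for this example.

```python
def train_perceptron(samples, epochs=25):
    """Train a single-layer perceptron with the classic error-correction rule."""
    w1 = w2 = bias = 0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            guess = 1 if w1 * x1 + w2 * x2 + bias > 0 else 0
            error = target - guess  # +1, 0, or -1
            w1 += error * x1
            w2 += error * x2
            bias += error
    return lambda x1, x2: 1 if w1 * x1 + w2 * x2 + bias > 0 else 0

def accuracy(model, samples):
    return sum(model(*inputs) == target for inputs, target in samples) / len(samples)

AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(accuracy(train_perceptron(AND_DATA), AND_DATA))  # 1.0
print(accuracy(train_perceptron(XOR_DATA), XOR_DATA))  # always below 1.0
```

AND can be separated with one straight line, so the perceptron learns it. No single straight line separates the XOR cases, so at least one of the four answers is always wrong, no matter how long it trains.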

Result: The First AI Winter

- Research slowed down.

- Funding stopped.

- Many scientists moved to other fields.

It was like AI had failed its first big exam.

Impact

The First AI Winter taught scientists a valuable lesson —

that real intelligence requires more than logic and math.

Machines needed better memory, learning ability, and faster hardware.

It wasn’t the end of AI — it was a pause for reflection.

Expert Systems — “AI Gets a Job” (1980s)

After the winter, AI came back in a new form.

Scientists said:

“Instead of making AI think like humans, let’s make it act like experts.”

Expert System = Knowledge + Rules + Decision

These systems used “if–then” rules to make decisions.

Examples:

DENDRAL (begun in 1965): Helped chemists identify molecules.

Took input data — chemical formula, mass spectrometry data, etc.

Applied expert rules — logical steps written by real chemists.

Gave output — the most likely molecular structure.

MYCIN (1970s): A medical AI that diagnosed blood infections.

Doctors would type symptoms, and MYCIN replied with possible diseases and medicines!

Example rule (simplified for illustration):

IF patient has fever AND sore throat → THEN possible strep infection.

XCON (1980s): Helped companies configure computers.

Instead of an engineer checking manuals for hours, XCON would apply rules like:

IF the system has 2 CPUs → THEN add this special cable and cooling system.

IF memory > 64MB → THEN use power supply model XYZ.
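The “Knowledge + Rules + Decision” recipe is easy to sketch in Python. This is a toy illustration, not how MYCIN or XCON were actually implemented: the `RULES` list and the `diagnose` function are names invented for this example, the first rule is the simplified one quoted above, and the second rule is made up to show that the list can grow.

```python
# Each rule: (set of conditions that must all be present, conclusion).
RULES = [
    ({"fever", "sore throat"}, "possible strep infection"),
    ({"fever", "stiff neck"}, "refer for further tests"),  # hypothetical rule
]

def diagnose(facts):
    """Return the conclusion of every rule whose conditions are all in the facts."""
    facts = set(facts)
    return [conclusion for conditions, conclusion in RULES if conditions <= facts]

print(diagnose(["fever", "sore throat", "cough"]))  # ['possible strep infection']
print(diagnose(["cough"]))                          # []
```

Notice that the program knows nothing beyond what is in `RULES`. To handle a new situation, a person has to write a new rule by hand.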

Companies made millions using these systems.

The Problem

But these systems couldn’t learn new rules or information on their own.

→ Every new situation needed a human to add more rules.

The systems became huge and hard to update — thousands of rules!

When the experts who built them left or retired, nobody could maintain the system.

So companies stopped investing — and governments again reduced research funding.

As the experts left and the rules went out of date, the field slid into the Second AI Winter.

But some scientists didn’t give up. They asked: “What if computers could learn by themselves?”

This idea led to the comeback of Neural Networks, systems inspired by the human brain.

In the mid-1980s, Geoffrey Hinton and his colleagues popularized Backpropagation, a way for AI to learn from its mistakes.

This changed everything: AI could finally improve by itself without waiting for humans to add new rules!

Neural Networks Return — “AI Learns to Learn” (1986–1990s)

Scientists rediscovered Backpropagation — a way to train neural networks.

Who: Geoffrey Hinton, David Rumelhart, and Ronald Williams.

What: AI could now adjust its internal “weights” to learn from mistakes.

Example:

AI looks at handwritten digits (0–9), guesses wrongly, then corrects itself next time — just like how you learn spelling after mistakes.
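Recognizing digits needs a bigger network than fits here, so the Python sketch below shows the same “guess, measure the mistake, correct the weights” loop on the smallest possible case: one input, one hidden neuron, one output. Everything in it (the starting weights, the target of 0.5, the learning rate) is an assumption chosen for illustration.

```python
import math

def train(x=1.0, target=0.5, w1=0.5, w2=0.5, lr=0.5, steps=200):
    """Train a tiny two-layer chain with backpropagation; return the loss at each step."""
    losses = []
    for _ in range(steps):
        # Forward pass: make a guess.
        hidden = math.tanh(w1 * x)
        output = w2 * hidden
        error = output - target
        losses.append(0.5 * error ** 2)

        # Backward pass: the chain rule carries the error back through each layer.
        grad_w2 = error * hidden
        grad_hidden = error * w2
        grad_w1 = grad_hidden * (1 - hidden ** 2) * x

        # Correct each weight a little, against its gradient.
        w1 -= lr * grad_w1
        w2 -= lr * grad_w2
    return losses

losses = train()
print(losses[0] > losses[-1])  # True: the mistake shrinks with practice
```

The key step is the backward pass: the error at the output is passed back to the hidden layer, so even weights deep inside the network know which way to change.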

Impact:

This was a major comeback for neural networks and the start of modern AI and deep learning.

Earlier AI worked only when everything was clear — like yes/no, true/false.

But the real world isn’t like that!

Doctors aren’t 100% sure when diagnosing, weather forecasts give chances, and even you guess sometimes based on probability.

So, scientists realized — AI must also handle uncertainty like humans do.

Bayesian Networks help AI reason when it doesn’t have complete information.

For example, a Doctor AI doesn’t know exactly why you have a fever,

but it can calculate:

70% chance of flu

30% chance of infection.

It’s like deciding whether to carry an umbrella. You check the sky: if it’s cloudy, there’s a 70% chance it might rain, so you take your umbrella anyway.

AI started thinking like that: smart guessing using probability.
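Here is a small Python sketch of where numbers like 70% and 30% can come from. It applies Bayes’ rule to a single symptom, which is the building block of a Bayesian Network rather than a full network. The prior and likelihood values are made up for this illustration so that the result matches the example above; they are not medical data.

```python
def posterior(priors, likelihoods):
    """Bayes' rule: combine prior beliefs with how well each cause explains the evidence."""
    joint = {cause: priors[cause] * likelihoods[cause] for cause in priors}
    total = sum(joint.values())
    return {cause: value / total for cause, value in joint.items()}

# Hypothetical numbers, chosen only for illustration.
priors = {"flu": 0.5, "infection": 0.5}               # belief before seeing any symptom
fever_likelihood = {"flu": 0.7, "infection": 0.3}     # chance of fever under each cause

beliefs = posterior(priors, fever_likelihood)
print({cause: round(p, 2) for cause, p in beliefs.items()})  # {'flu': 0.7, 'infection': 0.3}
```

If new evidence arrives (say, a test result), the same function can be run again with the current beliefs as the new priors.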

Reinforcement Learning — Learning by Experience

At the same time, another idea grew:

“What if AI could learn by trying, failing, and improving — just like humans?”

“Reinforcement Learning teaches AI by trial and error.

It gets rewards for good actions and punishments for bad ones.”

Type: Learning by Trial & Error (no correct label)

How it learns:

There’s no correct answer given — instead, the AI learns what’s good or bad based on rewards or penalties after each action.

Example:

A robot tries to walk.

It doesn’t know the correct leg movement.

It just moves — if it falls, it gets -1 point; if it walks a few steps, it gets +1.

Over time, it figures out the best way to move to get more rewards.

Think of it as:

“Teacher doesn’t tell the answer — just says good job or try again!”
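The walking robot can be sketched as a tiny trial-and-error loop in Python. This is a deliberately simplified illustration: the two actions, their fixed rewards of +1 and -1, and the function name `train_walker` are assumptions for this example. Real robots face many more actions and far noisier feedback.

```python
import random

def train_walker(episodes=200, epsilon=0.1, step_size=0.1, seed=0):
    """Learn action values from rewards alone, using epsilon-greedy trial and error."""
    rng = random.Random(seed)
    rewards = {"steady_step": 1.0, "lunge": -1.0}  # hypothetical: lunging makes it fall
    value = {action: 0.0 for action in rewards}    # the robot starts with no opinion
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(list(rewards))     # explore: try something at random
        else:
            action = max(value, key=value.get)     # exploit: use the best guess so far
        # Move the estimate for this action a little toward the reward it produced.
        value[action] += step_size * (rewards[action] - value[action])
    return value

learned = train_walker()
print(max(learned, key=learned.get))  # steady_step
```

Nobody tells the robot which action is correct. It only sees the reward after acting, and its estimates drift toward whatever earns more points.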

“AI Becomes Smarter — Learning from Uncertainty & Experience”🎲

Probabilistic Reasoning & Reinforcement Learning (1990s–2000s)

AI realized:

“The world isn’t certain — so I must deal with probabilities.”

Who: Judea Pearl.

What: Introduced Bayesian Networks — a way for AI to reason with uncertainty.

Example:

Doctor AI:

If fever → 70% chance of flu, 30% chance of infection.

At the same time, Reinforcement Learning grew — AI learns by trial and error and gets rewards or punishments.

In this era, AI stopped being a rule-follower and became a learner —

it learned to think under uncertainty and improve from experience.

Big Data Era — “AI Gets Internet Power” (2000s–2010s)

The internet exploded — billions of pictures, texts, and videos were available.

AI now had data fuel for learning.

Companies like Google, Facebook, and Amazon used AI for:

- Search results

- Recommendations

- Targeted ads

Example:

You search for “Nike shoes” once, and suddenly see shoe ads everywhere — that’s machine learning analyzing your behavior!

Impact:

AI became part of daily life.

Have you ever searched for a T-shirt or shoes online…

and then seen ads for it everywhere, on Instagram, YouTube, or Facebook?

That’s AI working with Big Data. It has become smart because of the Internet!

What Changed in the 2000s?

Before the 2000s, computers didn’t have enough data to learn from.

But then:

The Internet exploded, and billions of people started:

- Uploading photos

- Writing blogs

- Watching videos

- Buying things online

Suddenly — there was a mountain of data for AI to learn from.

AI became like a student who finally got access to all the world’s textbooks!

What is “Big Data”?

Simple definition:

“Big Data means huge amounts of data that are too large for humans to analyze manually — so we use AI to find patterns.”

Example:

Millions of people watch YouTube daily.

AI studies what you watch, how long you watch, and what you skip.

Then it recommends exactly what you might like next — that’s Big Data + AI working together.

How AI Used This Data (in Companies)

Example 1: Google Search

When you type a few words — AI predicts what you’re looking for using past search patterns from millions of users.

“You type ‘best phone’, it auto-suggests ‘best phone 2025 under ₹20,000’ — that’s AI analyzing what most people searched.”

Example 2: Amazon Recommendations

AI looks at what you buy and what similar users buy, then recommends items.

“If you buy a laptop, AI suggests a laptop bag, mouse, or screen cleaner.”

These are called Recommendation Systems, and they are powered by Big Data.
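One simple way to get “people who bought this also bought that” is to count which items appear together in past orders. The Python sketch below shows that idea only. It is not how Amazon’s system works, and the order data and the `recommend` function are invented for this example.

```python
from collections import Counter

def recommend(orders, item, top_n=2):
    """Suggest the items most often bought in the same order as `item`."""
    bought_together = Counter()
    for order in orders:
        if item in order:
            bought_together.update(other for other in order if other != item)
    return [other for other, _ in bought_together.most_common(top_n)]

# Hypothetical purchase history.
orders = [
    ["laptop", "laptop bag", "mouse"],
    ["laptop", "mouse"],
    ["laptop", "laptop bag", "screen cleaner"],
    ["phone", "phone case"],
]

print(recommend(orders, "laptop"))  # ['laptop bag', 'mouse']
```

With four orders the counts are tiny. With millions of orders, the same counting starts to reveal reliable patterns, which is why Big Data matters so much here.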

Example 3: Facebook / Instagram Ads

AI studies your likes, follows, and searches.

So when you look for Nike shoes once,

→ AI remembers, connects it with other users who like similar things,

→ and shows you shoe ads again and again 👟.

“AI is like that friend who never forgets what you like!”

Why It’s Important

Because of Big Data:

- AI became smarter and more accurate.

- It started learning from real-world behavior, not just lab data.

- It could personalize everything: your music, shopping, news, and even social feeds.

“That’s why every person’s YouTube home page is different: AI has learned your preferences!”

Deep Learning — “AI Becomes a Superstar” (2010s–Now)

Breakthrough: Deep Neural Networks (many layers).

They can recognize images, text, and voices — almost like humans.

Key moments:

- 2012: Geoffrey Hinton’s team wins ImageNet with deep learning.

- 2016: AlphaGo beats world champion Lee Sedol in the game Go.

- 2022–23: ChatGPT, DALL·E, Midjourney — AI that creates art, text, and music.

Example:

Say “Draw a cat flying on pizza” → AI draws it.

Ask “Explain AI history” → ChatGPT answers!

Impact:

This is the age of Generative AI — AI that creates new content.

What is Deep Learning?

“Deep Learning is a brain-inspired kind of AI that uses neural networks made of many layers.”

Each layer learns something:

- The first layer learns basic shapes or letters.

- Middle layers learn features like faces, objects, or words.

- The final layer gives the answer or prediction.

💬 Simple analogy:

“It’s like a school — each class (layer) learns a bit more, and the final class (layer) gives the final answer.”
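In code, “layers” simply means that the output of one step becomes the input of the next. The Python sketch below passes two numbers through a two-layer network. The weights are hand-picked for illustration (a real network learns them during training), and the helper names `dense` and `forward` are choices made for this example.

```python
def relu(values):
    """Keep positive signals, silence negative ones."""
    return [max(0.0, v) for v in values]

def dense(weights, biases):
    """Build one layer: a weighted sum per neuron, followed by ReLU."""
    def layer(inputs):
        sums = [sum(w * x for w, x in zip(row, inputs)) + b
                for row, b in zip(weights, biases)]
        return relu(sums)
    return layer

def forward(inputs, layers):
    """Pass the data through each layer in turn, like moving up through classes."""
    for layer in layers:
        inputs = layer(inputs)
    return inputs

network = [
    dense([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # first layer: 2 inputs -> 2 neurons
    dense([[1.0, 1.0]], [-0.5]),                   # final layer: 2 inputs -> 1 answer
]

print(forward([2.0, 1.0], network))  # [2.0]
```

Adding more entries to `network` makes the model deeper without changing `forward` at all.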

Why It’s Called “Deep”

“Earlier neural networks had 1 or 2 layers.

But now we can build networks with dozens or hundreds of layers — that’s why it’s called Deep Learning!”

More layers let a network learn more complex patterns, as long as there is enough data and computing power to train it.

Big Breakthrough Moments

| Year | Event | What Happened |
| --- | --- | --- |
| 2012 | Geoffrey Hinton’s team wins ImageNet | Deep learning beats all old methods in recognizing images |
| 2016 | AlphaGo beats Lee Sedol | AI beats the world champion in the world’s hardest game, Go! |
| 2022–23 | ChatGPT, DALL·E, Midjourney | AI starts writing, drawing, and composing music |

“AI is no longer just solving problems — it’s creating new things! That’s why we call this the Generative AI Era.”

“From the simple logic of the 1940s to today’s AI that can talk, draw, and compose,

AI has grown from thinking to creating.

And you, the next generation, will shape what comes next!”

History Summary

| Era / Year | Key Scientists | Major Contribution / Invention | Meaning / Impact |
| --- | --- | --- | --- |
| 1943 | Warren McCulloch & Walter Pitts | Created the first mathematical model of a neuron (Artificial Neuron). | Showed that the brain could be simulated using logic and math; foundation of Neural Networks. |
| 1949 | Donald Hebb | Proposed Hebb’s Rule: “Neurons that fire together, wire together.” | Explained how learning happens in the brain; inspired Hebbian learning in AI. |
| 1950 | Alan Turing | Wrote “Computing Machinery and Intelligence” and proposed the Turing Test. | Asked, “Can machines think?” and gave AI its philosophical foundation. |
| 1956 | John McCarthy, Marvin Minsky, Nathaniel Rochester, Claude Shannon | Organized the Dartmouth Conference and coined the term “Artificial Intelligence.” | The birth of AI as a field of study. |
| 1956–1969 | Allen Newell & Herbert Simon, Arthur Samuel, John McCarthy | Logic Theorist (first reasoning program); Samuel’s Checkers (machine learning); LISP (AI programming language) | AI learned to reason and learn. LISP became the main AI programming language. |
| 1970s | - | First AI Winter (loss of funding and interest). | AI failed on real-world problems because computers were slow and limited. |
| 1980s | Edward Feigenbaum, Bruce Buchanan, John McDermott | Rise of Expert Systems (DENDRAL, MYCIN, XCON). | AI used if–then rules to act like experts. Successful in industry, but the systems couldn’t learn new rules, which led to the Second AI Winter. |
| 1986–1990s | Geoffrey Hinton, David Rumelhart, Ronald Williams | Rediscovered Backpropagation, a method to train Neural Networks. | Neural Networks could now learn from mistakes; beginning of modern AI. |
| 1990s–2000s | Judea Pearl | Developed Bayesian Networks (probabilistic reasoning). Rise of Reinforcement Learning (learning from rewards). | AI began to handle uncertainty and learn from experience. |
| 2000s–2010s | Google, Facebook, Amazon engineers | Big Data Era: AI trained using Internet data (images, videos, text). | AI powered search engines, ads, and recommendations, and became part of daily life. |
| 2010s–Now | Geoffrey Hinton, DeepMind (AlphaGo), OpenAI (ChatGPT, DALL·E) | Deep Learning & Generative AI. | AI recognizes images, text, speech and creates art, music, and text: the AI Superstar Era. |