Alright, so our Utility-Based Agent is already quite smart — it knows what makes it “happy” and chooses the best action accordingly. But… what if one day, the environment changes?

Imagine this —

Your Google Maps says, “Take a left for the fastest route.” 🚗

You take the left… and boom — the road is under construction! 😩

Now, what should the agent do? Should it keep making the same mistake every day?

Of course not! It needs to learn from experience.

That’s where our next hero enters the scene — 🧠 The Learning Agent!

This agent doesn’t just act smart — it actually becomes smarter over time.

It observes what works, what fails, and improves its decisions just like humans do after trial and error.

So, you can tell your students:

“Till now, our agents were like students who study only from the book.

But the Learning Agent is like the student who experiments, makes mistakes, learns from them, and scores even better next time!” 😄

Now let’s dive into how this Learning Agent works and why it’s the most powerful type among all! 🚀

It learns how to perform better in an environment through four key components:

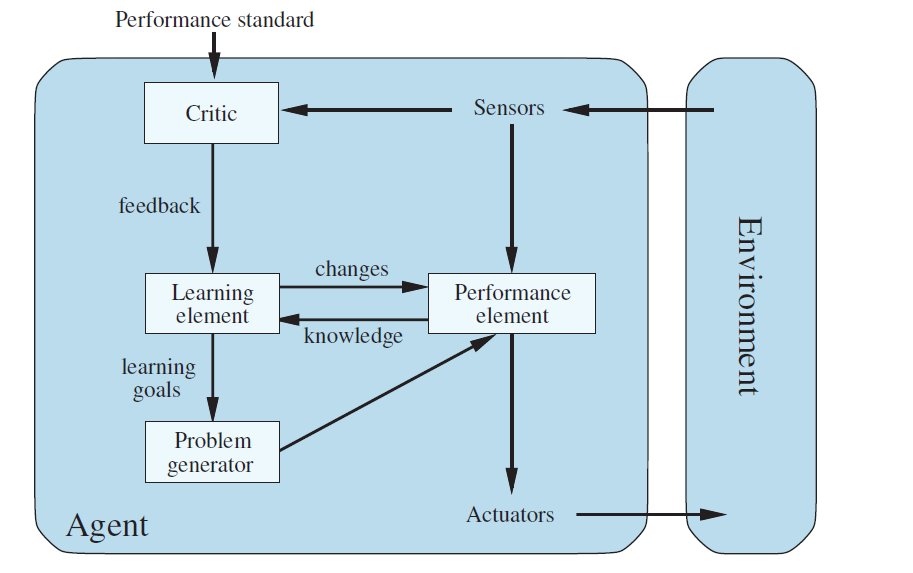

It has four main components inside the agent box:

- Performance Element

- Critic

- Learning Element

- Problem Generator

And two interfaces to the Environment:

- Sensors (input: to sense the world)

- Actuators (output: to act on the world)

⚙️ Components of a Learning Agent

1️⃣ Performance Element – The “doer”

Function:

This is the part of the agent that actually interacts with the environment — it takes input (percepts) from sensors and decides what actions to take through actuators.

Arrow Flow:

Sensors ➜ Performance Element ➜ Actuators ➜ Environment

Example (Self-driving car):

Sensors detect: “Traffic signal is red.”

Performance Element decides: “Stop the car.”

Actuator performs braking action.

The car safely stops → environment changes (car is now stationary).

So, the Performance Element is the part of the agent that acts based on its current knowledge.
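
To make this concrete, here is a minimal Python sketch of a Performance Element. Everything in it is an assumption for illustration: the class name `PerformanceElement`, the rule table, and the percept and action strings are made up, and a real self-driving car would be far more complex.

```python
from dataclasses import dataclass, field


@dataclass
class PerformanceElement:
    """Chooses an action from the current percept using current knowledge."""

    # Hypothetical rule table (percept -> action); a learning element could edit it later.
    rules: dict = field(default_factory=lambda: {
        "red_signal": "brake",
        "green_signal": "accelerate",
    })
    default_action: str = "maintain_speed"

    def choose_action(self, percept: str) -> str:
        # Look up the percept; fall back to the default when no rule matches.
        return self.rules.get(percept, self.default_action)


driver = PerformanceElement()
print(driver.choose_action("red_signal"))
```

Notice that the rules live in plain data rather than in hard-coded `if` statements. That is a deliberate choice in this sketch: it gives the other components something they can change later.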

2️⃣ Critic – The “evaluator”

Function:

The Critic observes what the agent is doing and evaluates performance by comparing actual results with a Performance Standard (goal or benchmark).

Arrow Flow:

Sensors ➜ Critic

Performance Standard ➜ Critic ➜ Learning Element (Feedback)

Example:

- Performance Standard: “Complete each trip safely and within 30 minutes.”

- Sensors report: “Trip took 45 minutes; passenger was uncomfortable.”

- Critic evaluates this against the standard and concludes: “Performance is below expectation.”

- Sends feedback: “Driving too slow; braking too harsh.”

So, the Critic doesn’t control the agent — it only judges how well the agent is doing.
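
The Critic's job can be sketched in a few lines of Python. This is only an illustration: the names `PerformanceStandard` and `critic` are hypothetical, and counting hard brakes is an assumed stand-in for "passenger was uncomfortable".

```python
from dataclasses import dataclass


@dataclass
class PerformanceStandard:
    max_trip_minutes: float = 30.0  # from the example above
    max_hard_brakes: int = 0        # assumed proxy for passenger comfort


def critic(trip_minutes: float, hard_brakes: int, standard: PerformanceStandard) -> list:
    """Compare observed results with the standard and return feedback messages."""
    feedback = []
    if trip_minutes > standard.max_trip_minutes:
        feedback.append("driving too slow")
    if hard_brakes > standard.max_hard_brakes:
        feedback.append("braking too harsh")
    return feedback


feedback = critic(trip_minutes=45, hard_brakes=3, standard=PerformanceStandard())
print(feedback)
```

The function only returns feedback. It never chooses an action, which matches the idea that the Critic judges but does not control.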

3️⃣ Learning Element – The “improver”

Function:

The Learning Element receives feedback from the Critic and updates the Performance Element so that future actions are better.

It modifies the agent’s internal knowledge or strategy.

Arrow Flow:

Critic ➜ Learning Element (Feedback)

Learning Element ➜ Performance Element (Changes/Updates)

Example:

- Learning Element receives feedback: “Driving is slow and jerky.”

- It adjusts rules or parameters — maybe it learns to accelerate more smoothly and anticipate traffic lights better.

- These changes are then applied to the Performance Element to improve the next trip.

So, the Learning Element is like a teacher inside the agent — it keeps improving the “student” (Performance Element).
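
Here is one possible way to picture that update step in Python. It is a sketch under stated assumptions: the function name `learning_element`, the parameter names (`cruise_speed_kmh`, `brake_distance_m`, `speed_limit_kmh`), and the 10% adjustment step are all invented for this example and are not a standard algorithm.

```python
def learning_element(params: dict, feedback: list, step: float = 0.1) -> dict:
    """Return updated driving parameters based on the critic's feedback."""
    updated = dict(params)  # copy so the caller's settings are not mutated
    if "driving too slow" in feedback:
        # Raise cruising speed by a small fraction, capped at the speed limit.
        updated["cruise_speed_kmh"] = min(
            params["cruise_speed_kmh"] * (1 + step), params["speed_limit_kmh"]
        )
    if "braking too harsh" in feedback:
        # Start braking earlier so that deceleration is gentler.
        updated["brake_distance_m"] = params["brake_distance_m"] * (1 + step)
    return updated


params = {"cruise_speed_kmh": 40.0, "speed_limit_kmh": 50.0, "brake_distance_m": 20.0}
new_params = learning_element(params, ["driving too slow", "braking too harsh"])
print(new_params)
```

The small step size is intentional: the agent nudges its behaviour a little after each trip instead of making one big, risky change.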

4️⃣ Problem Generator – The “explorer”

Function:

The Problem Generator helps the agent explore new and different actions — even risky ones — to gather new information and learn better strategies.

If the agent only sticks to what it already knows, it will never discover new or better methods.

Arrow Flow:

Learning Element ➜ Problem Generator (Learning Goals)

Problem Generator ➜ Performance Element (New Experiments)

Example:

- The self-driving car normally takes the main road.

- Problem Generator suggests: “Try a new route through side streets to learn if it’s faster.”

- The car tries it → learns it’s 5 minutes faster.

- Learning Element stores this as new knowledge → Performance Element now prefers this route.

So, the Problem Generator is like a scientist inside the agent — it creates experiments to help the agent learn more.
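
The route experiment above can be sketched in Python as a simple "explore sometimes, otherwise use the best known option" rule. This is an assumed, simplified strategy chosen for illustration, and the function name `problem_generator`, the route names, and the trip times are hypothetical.

```python
import random


def problem_generator(known_times: dict, all_routes: list,
                      explore_prob: float, rng: random.Random) -> str:
    """Pick a route: usually the best known one, sometimes an experiment."""
    untried = [r for r in all_routes if r not in known_times]
    if untried and rng.random() < explore_prob:
        return rng.choice(untried)  # experiment: a route with no data yet
    return min(known_times, key=known_times.get)  # fastest route known so far


rng = random.Random(0)
known_times = {"main_road": 30.0}
routes = ["main_road", "side_streets"]

route = problem_generator(known_times, routes, explore_prob=1.0, rng=rng)
known_times[route] = 25.0  # made-up measurement: 5 minutes faster
best = min(known_times, key=known_times.get)
print(route, best)
```

Setting `explore_prob` to 0 gives an agent that never experiments and so never finds the side streets. Setting it to 1 makes it try every untested route before settling down. Real systems usually pick something in between.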

Summary: Steps of a Learning Agent

| Step | Arrow Direction | Description | Example (Self-Driving Car) |
| --- | --- | --- | --- |
| 1 | Environment → Sensors | Agent perceives world | Cameras detect traffic, road, signals |
| 2 | Sensors → Performance Element | Performance element receives data | Sees “Red signal” |
| 3 | Performance Element → Actuators | Takes action | Car brakes to stop |
| 4 | Actuators → Environment | Environment changes | Car is now stopped at red light |
| 5 | Sensors → Critic | Critic observes what happened | Observes time taken, comfort, safety |
| 6 | Performance Standard → Critic | Provides benchmark for comparison | “Trip should take 30 min safely” |
| 7 | Critic → Learning Element | Gives feedback | “Driving too slow” |
| 8 | Learning Element → Performance Element | Applies improvements | Learns smoother acceleration and optimal route |
| 9 | Learning Element → Problem Generator | Sets learning goals | “Test different speeds or routes” |
| 10 | Problem Generator → Performance Element | Suggests exploration | Tries alternate route next trip |
| 11 | Loop continues | Agent improves continuously | Becomes more skilled over time |
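
The whole loop can be put together in one small Python simulation. Treat it as a toy sketch, not a real driving system: the route names, the trip times in `TRUE_MINUTES`, and the function `run_agent` are all invented, and the environment is deterministic to keep the example short.

```python
import random

# Made-up environment: true trip time in minutes for each route.
TRUE_MINUTES = {"main_road": 45.0, "side_streets": 28.0, "highway": 35.0}
STANDARD_MINUTES = 30.0  # performance standard: trip within 30 minutes


def run_agent(trips: int = 5, explore_prob: float = 1.0, seed: int = 0):
    rng = random.Random(seed)
    estimates = {"main_road": 45.0}  # agent's knowledge: one known route at first
    log = []
    for _ in range(trips):
        # Steps 9-10: the problem generator may propose an untried route.
        untried = [r for r in TRUE_MINUTES if r not in estimates]
        if untried and rng.random() < explore_prob:
            route = rng.choice(untried)
        else:
            # Steps 1-3: the performance element picks the best known route.
            route = min(estimates, key=estimates.get)
        # Step 4: act in the environment and observe the resulting trip time.
        minutes = TRUE_MINUTES[route]
        # Steps 5-7: the critic compares the outcome with the standard.
        met_standard = minutes <= STANDARD_MINUTES
        # Step 8: the learning element stores the observed time as new knowledge.
        estimates[route] = minutes
        log.append((route, minutes, met_standard))
    return estimates, log


estimates, log = run_agent()
for trip in log:
    print(trip)
```

In this sketch the critic's verdict is only logged, while the learning element simply records each observed trip time. A fuller agent would let the critic's feedback drive larger changes, such as adjusting driving parameters.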

Why “Learning Agents” Are the Future

Learning agents are the most flexible of the agent types covered so far, because they keep improving from experience instead of relying only on what was built in.

They are used in: