So far, our Goal-Based Agent sounds pretty smart, right?

It knows what it wants (the goal) and plans how to get there.

But wait… what if the agent has two different ways to reach the same goal? 🤔

For example —

Imagine you (the agent 😄) want to go to college.

- Route 1: Reach by bus — takes 30 minutes.

- Route 2: Reach by car — takes 10 minutes but costs more fuel.

Both achieve your goal — reaching college! 🎓

But which one is better?

That’s where our next hero enters: the Utility-Based Agent! 🦸‍♀️

This agent doesn’t just ask, “Can I reach my goal?”

It asks, “Which option gives me the best satisfaction or benefit?”

It measures happiness, comfort, speed, or efficiency — whatever matters most —

and chooses the most useful (utility) path.
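To make “whatever matters most” concrete, here is a minimal Python sketch of the bus-vs-car choice. The travel times come from the example; the costs (bus ticket vs. car fuel) and the two sets of weights are hypothetical numbers chosen only for illustration. It shows that the same two routes can lead to different decisions depending on what the agent values.

```python
# Travel times come from the example; the costs are hypothetical
# (think bus ticket vs. car fuel, in arbitrary money units).
ROUTES = {
    "bus": {"minutes": 30, "cost": 20},
    "car": {"minutes": 10, "cost": 50},
}


def utility(route: dict, minute_weight: float, cost_weight: float) -> float:
    """Higher is better: every minute spent and every unit of money lowers the score."""
    return -(minute_weight * route["minutes"] + cost_weight * route["cost"])


def best_route(minute_weight: float, cost_weight: float) -> str:
    """Pick the route with the highest utility under the given priorities."""
    return max(ROUTES, key=lambda name: utility(ROUTES[name], minute_weight, cost_weight))


# A student in a hurry values time a lot and money less (hypothetical weights).
print(best_route(minute_weight=3.0, cost_weight=0.5))  # car
# A student saving money weighs one minute and one money unit equally.
print(best_route(minute_weight=1.0, cost_weight=1.0))  # bus
```

Both routes reach college, so a plain goal test cannot tell them apart; only the weights inside the utility function let the agent prefer one.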

So now, let’s meet this “smart decision-maker” —

the Utility-Based Agent, who knows how to make life easier and smarter! 💡

“Now imagine it’s Monday morning and you’re going to school 🏫.”

“There are two routes:

1️⃣ A short road… but full of traffic 🚗🚗🚗 — honking, dust, chaos!

2️⃣ A longer road… but smooth, quiet, and maybe even has a pani puri stall on the way 😋.”

Pause, then ask:

“So, which one will you choose?”

Some will answer “The short one!”, others “The peaceful one!”

“That’s where the Utility-Based Agent comes in! 🧠✨

It doesn’t settle for just any way of reaching the goal; it wants the best one.

It checks which option gives more happiness, comfort, or benefit.”

“Basically, the Utility Agent says —

‘Why reach school early if I’ll arrive angry?’ 😤😂

It chooses the road that makes life better — not just shorter.”

“It’s like your brain is running Google Maps with feelings! 🥰”

“So a Utility-Based Agent doesn’t just ask,

‘Can I reach my goal?’

It asks,

‘Which way will give me the best result?’”

💡 Example Recap:

Goal-Based: Just wants to reach school.

Utility-Based: Wants to reach school happily and peacefully. 😎

🧩 1️⃣ What is a Utility-Based Agent?

A Utility-Based Agent is an improvement over a Goal-Based Agent.

Instead of only checking “Did I reach the goal or not?”,

it also checks “How good or bad is this outcome?”

So, it doesn’t just care about reaching the destination,

but also how comfortable, safe, or fast that journey was.

💡 Example:

Think of a self-driving car.

Its goal is simple: “Reach the destination.”

But there are many ways to reach it:

🚗 Drive fast but risk accidents,

🚗 Drive slow but stay safe,

🚗 Take a longer but scenic route.

A Goal-Based Agent only checks if it reached the destination.

A Utility-Based Agent checks which option is best overall — balancing safety, comfort, and time.

So utility is a numerical measure of happiness or satisfaction: the higher the number, the more desirable the outcome.

It helps the agent pick the best option, not just any working one.
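As a rough sketch of that difference, the Python below scores the three driving styles. It assumes the utility is a weighted sum of safety, comfort, and speed scores; the scores and weights are made-up illustrative values, not taken from any real self-driving system. The goal test accepts all three options, while the utility function ranks them.

```python
# Illustrative attribute scores in [0, 1], higher is better (made-up values).
OPTIONS = {
    "drive fast":   {"reaches_goal": True, "safety": 0.3, "comfort": 0.5, "speed": 0.9},
    "drive slow":   {"reaches_goal": True, "safety": 0.9, "comfort": 0.8, "speed": 0.4},
    "scenic route": {"reaches_goal": True, "safety": 0.8, "comfort": 0.9, "speed": 0.2},
}

# Hypothetical weights: how much this agent cares about each factor (they sum to 1).
WEIGHTS = {"safety": 0.5, "comfort": 0.2, "speed": 0.3}


def goal_test(outcome: dict) -> bool:
    """Goal-based view: only a yes/no answer."""
    return outcome["reaches_goal"]


def utility(outcome: dict) -> float:
    """Utility-based view: a weighted sum that turns an outcome into one number."""
    return sum(weight * outcome[factor] for factor, weight in WEIGHTS.items())


acceptable = [name for name, outcome in OPTIONS.items() if goal_test(outcome)]
print("Goal-based agent accepts:", acceptable)  # all three options

for name, outcome in OPTIONS.items():
    print(f"{name}: utility = {utility(outcome):.2f}")
# drive fast: utility = 0.52
# drive slow: utility = 0.73
# scenic route: utility = 0.64

best = max(OPTIONS, key=lambda name: utility(OPTIONS[name]))
print("Utility-based agent picks:", best)  # drive slow
```

Changing `WEIGHTS` (for example, caring mostly about speed) would change the winner, which is exactly the “balancing” the text describes.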

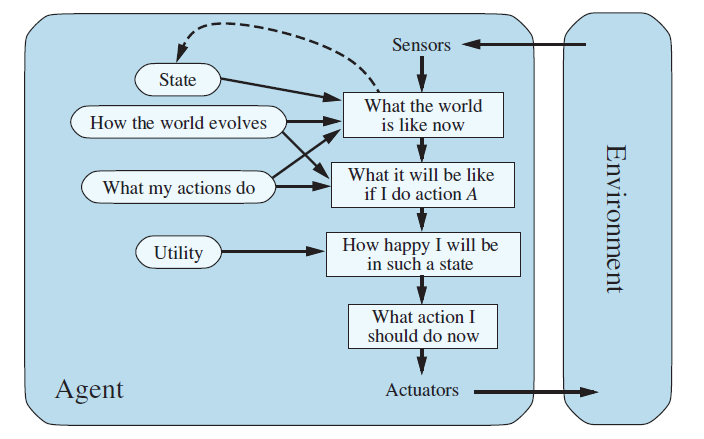

This diagram shows how a Utility-Based Agent works. It is a more advanced type of agent than the goal-based one: it not only tries to achieve goals but also measures how good or satisfying each possible outcome is.

🔹 Step 1: Sensors → “What the world is like now”

- Sensors collect information about the current environment.

- This is the percept — what the agent sees or senses right now.

🧭 Example:

Car sensors detect:

- Speed = 60 km/h

- Road ahead = wet

- Distance to car in front = 10 m

So, the agent’s current world state is: “Wet road, traffic ahead.”
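Here is one way to picture Step 1 in Python: the percept is just a bundle of sensor readings, and the agent turns it into a short description of the current state. The `Percept` class, its field names, and the 30 m “traffic ahead” threshold are all hypothetical choices for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    """One snapshot of sensor readings (hypothetical field names)."""
    speed_kmh: float
    road_wet: bool
    gap_m: float  # distance to the car in front, in metres


TRAFFIC_GAP_M = 30.0  # hypothetical: a closer car counts as "traffic ahead"


def describe_world(p: Percept) -> str:
    """Turn raw readings into 'what the world is like now'."""
    road = "Wet road" if p.road_wet else "Dry road"
    traffic = "traffic ahead" if p.gap_m < TRAFFIC_GAP_M else "clear road ahead"
    return f"{road}, {traffic}"


percept = Percept(speed_kmh=60, road_wet=True, gap_m=10)
print(describe_world(percept))  # Wet road, traffic ahead
```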

🔹 Step 2: State + “How the world evolves” → “What the world is like now”

These two help the agent understand and predict:

“How things will change over time.”

“How my actions will affect the world.”

🧩 Example:

“If it rains, the road becomes slippery.”

“If I press the brakes hard on a wet road → car may skid.”

So, this helps the agent form an internal model of how the world behaves.
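The two example rules can be written as a tiny rule-based world model. This is a deliberately simplified sketch: the state is a plain dictionary, and `evolve` and `may_skid` are hypothetical names; a real car would rely on much richer models.

```python
def evolve(state: dict, raining: bool) -> dict:
    """How the world evolves: rain makes the road wet (returns a new state)."""
    new_state = dict(state)
    if raining:
        new_state["road_wet"] = True
    return new_state


def may_skid(state: dict, action: str) -> bool:
    """What my actions do: braking hard on a wet road risks a skid."""
    return state["road_wet"] and action == "brake hard"


state = {"speed_kmh": 60, "road_wet": False, "gap_m": 10}
state = evolve(state, raining=True)
print(state["road_wet"])                # True
print(may_skid(state, "brake hard"))    # True
print(may_skid(state, "brake gently"))  # False
```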

🔹 Step 3: “What my actions do” → “What it will be like if I do action A”

This step simulates outcomes of possible actions.

🧮 Example:

The agent considers:

- If I accelerate, will I get there faster or hit the car in front?

- If I brake, will I stop safely or skid?

This gives the predicted result of each possible action.

→ It’s basically imagining the future outcome of each action.
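Step 3 amounts to calling a “what if” function for every candidate action without actually doing anything. The sketch below assumes three actions and hand-written rules (the 20 m safe-gap threshold is made up); it only returns descriptions, since scoring them is the job of Step 4.

```python
ACTIONS = ["accelerate", "brake gently", "brake hard"]
SAFE_GAP_M = 20.0  # hypothetical: closer than this, accelerating is risky


def predict(state: dict, action: str) -> str:
    """Imagine the result of `action` in `state` (hand-written rules, not real physics)."""
    if action == "accelerate":
        if state["gap_m"] < SAFE_GAP_M:
            return "reach fast but risk collision"
        return "reach fast"
    if action == "brake gently":
        return "safe, slightly slower"
    if action == "brake hard":
        if state["road_wet"]:
            return "stop, but uncomfortable and may skid"
        return "stop but uncomfortable"
    raise ValueError(f"unknown action: {action}")


state = {"speed_kmh": 60, "road_wet": True, "gap_m": 10}
for action in ACTIONS:
    print(f"If I {action} -> {predict(state, action)}")
```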

🔹 Step 4: Utility → “How happy I will be in such a state”

Now the agent uses its utility function to evaluate each predicted outcome.

The utility function assigns a score (utility value) to each state based on how desirable it is.

Example:

| Possible Action | Predicted Outcome | Utility (Happiness) |
|---|---|---|
| Accelerate | Reach fast but risk collision | 0.4 |
| Brake gently | Safe, slightly slower | 0.9 |
| Brake hard | Stop but uncomfortable | 0.6 |

The higher the utility, the better the option.
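One simple way (among many) to get numbers like those in the table is to start from a perfect score of 1.0 and subtract a penalty for each bad thing about the predicted outcome. The penalty values below are hypothetical and were picked so the results match the table; they only show how such scores could arise.

```python
# Hypothetical penalties for each predicted outcome (0.0 = no problem at all).
PREDICTED = {
    "accelerate":   {"crash_risk": 0.6, "discomfort": 0.0, "delay": 0.0},
    "brake gently": {"crash_risk": 0.0, "discomfort": 0.0, "delay": 0.1},
    "brake hard":   {"crash_risk": 0.1, "discomfort": 0.3, "delay": 0.0},
}


def utility(penalties: dict) -> float:
    """Start from a perfect 1.0 and subtract every penalty."""
    return 1.0 - sum(penalties.values())


scores = {action: round(utility(p), 2) for action, p in PREDICTED.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores)   # {'accelerate': 0.4, 'brake gently': 0.9, 'brake hard': 0.6}
print(ranking)  # ['brake gently', 'brake hard', 'accelerate']
```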

🔹 Step 5: “What action I should do now” → Actuators

Finally, the agent chooses the action with the highest utility value

and sends it to the actuators to perform in the real world.

➡️ This is the decision-making step.

🧭 Example:

The agent decides: “Brake gently.”

Actuators (brake system) perform the action.

✅ Safe, smooth stop — best balance between time and safety.
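The decision step itself is just “pick the action with the largest utility”. In this sketch the utilities are copied from the Step 4 table, and `send_to_actuators` is a hypothetical stand-in that only prints what a real brake or throttle controller would do.

```python
# Utilities copied from the Step 4 table.
UTILITIES = {"accelerate": 0.4, "brake gently": 0.9, "brake hard": 0.6}


def choose_action(utilities: dict) -> str:
    """Return the action whose utility is highest (argmax)."""
    return max(utilities, key=utilities.get)


def send_to_actuators(action: str) -> None:
    """Hypothetical stand-in for the car's real actuators."""
    print(f"Actuators: {action}")


action = choose_action(UTILITIES)
send_to_actuators(action)  # Actuators: brake gently
```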

Summary

| Step | Arrow Flow | Explanation | Example |
|---|---|---|---|
| 1️⃣ | Environment → Sensors | Agent perceives world | Detects rain, traffic |
| 2️⃣ | Sensors → What the world is like now | Current state | Wet road |
| 3️⃣ | State + How the world evolves → Predicts next state | Understands environment behavior | Wet road → risk of skid |
| 4️⃣ | What my actions do → What it will be like if I do action A | Predicts effect of actions | “If I accelerate → risk accident” |
| 5️⃣ | Utility → How happy I will be in such a state | Evaluates each outcome | Safe = 0.9, Risky = 0.4 |
| 6️⃣ | Choose best → What action I should do now | Decision step | Brake gently |
| 7️⃣ | Actuators → Environment | Executes action | Car stops safely |
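Putting the rows of the summary together, a utility-based agent fits into one short loop: perceive, predict each action's outcome, score it, and act on the best. Everything here (names, thresholds, penalty values) is a hypothetical, simplified sketch rather than a real driving controller. Outcomes are scored by subtracting made-up penalties from 1.0, so a wet road with a car 10 m ahead leads to “brake gently”.

```python
from dataclasses import dataclass

ACTIONS = ["accelerate", "brake gently", "brake hard"]
SAFE_GAP_M = 20.0  # hypothetical: closer than this, accelerating is risky


@dataclass
class State:
    """What the world is like now (hypothetical fields)."""
    speed_kmh: float
    road_wet: bool
    gap_m: float


def perceive(env: dict) -> State:
    """Rows 1-3: read the sensors and apply 'rain makes the road wet'."""
    return State(env["speed_kmh"], env["road_wet"] or env["raining"], env["gap_m"])


def predict(state: State, action: str) -> dict:
    """Row 4: imagine the outcome of `action` as a set of penalties (0 = fine)."""
    if action == "accelerate":
        crash = 0.6 if state.gap_m < SAFE_GAP_M else 0.0
        return {"crash_risk": crash, "discomfort": 0.0, "delay": 0.0}
    if action == "brake gently":
        return {"crash_risk": 0.0, "discomfort": 0.0, "delay": 0.1}
    if action == "brake hard":
        skid = 0.1 if state.road_wet else 0.0
        return {"crash_risk": skid, "discomfort": 0.3, "delay": 0.0}
    raise ValueError(f"unknown action: {action}")


def utility(outcome: dict) -> float:
    """Row 5: how happy I will be in such a state (1.0 minus all penalties)."""
    return 1.0 - sum(outcome.values())


def agent(env: dict) -> str:
    """Row 6: choose the action whose predicted outcome has the highest utility."""
    state = perceive(env)
    return max(ACTIONS, key=lambda a: utility(predict(state, a)))


# Row 7 would hand this action to the actuators; here we just print it.
print(agent({"speed_kmh": 60, "road_wet": True, "raining": True, "gap_m": 10}))    # brake gently
print(agent({"speed_kmh": 60, "road_wet": False, "raining": False, "gap_m": 80}))  # accelerate
```

On a dry, empty road the same agent prefers to accelerate, because nothing in its predicted outcome is penalised; the decision changes with the situation, not just with the goal.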